Kuwa v0.4.0 now includes support for Qualcomm's Neural Processing Unit (NPU), contributed by Thuniverse AI Inc. This integration delivers significantly faster generation speeds while minimizing power consumption. Because Kuwa can't reliably detect NPU availability on all systems, this feature is not enabled by default. Follow these steps to enable Qualcomm NPU support:


Introduction to Kuwa GenAI OS v0.4.0

Kuwa GenAI OS is an open, free, secure, and privacy-focused AI orchestration system. It provides a user-centric interface for generative AI that lets users interact with a wide range of models, agents, executables, scripts, tools, weblets, and applications. The platform integrates multiple models and bots in a no-code or low-code setting, which streamlines the execution of sophisticated tasks.

Kuwa offers a comprehensive solution for multilingual, multi-model development and deployment—empowering individuals and businesses to harness generative AI, build applications, launch online stores, and deliver external services across local laptops, on-premise servers, or in the cloud.

In Taiwan’s Siraya language, Kuwa refers to a public hall that served as a gathering place for meetings, discussions, and decision-making.

Here's a summary of Kuwa GenAI OS's features:

Groq Tutorial

Kuwa v0.3.4 lets users set custom third-party API keys, so they can connect to APIs beyond Gemini and ChatGPT. Many users find it difficult to run large models such as Llama3.1 70B locally, and Groq offers a free cloud API for these models. This article explains how to connect the Groq API to Kuwa v0.3.4.

Applying for a Groq API Key

- Log in to Groq Cloud

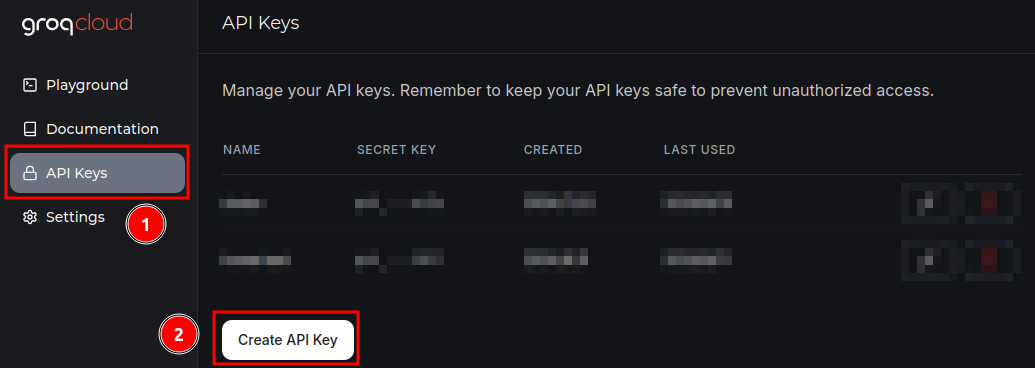

- Create a new API key

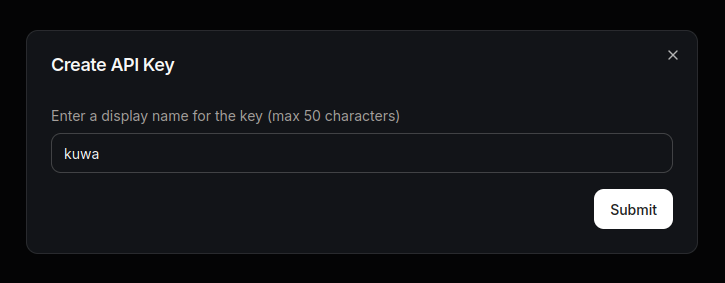

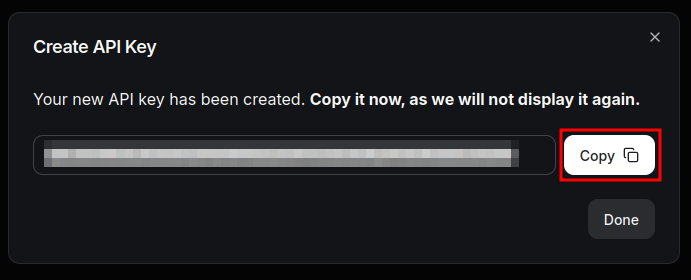

Enter a memorable API key name and copy the generated API key.

Be sure to save the API key, as it will not be displayed again after the dialog box closes.

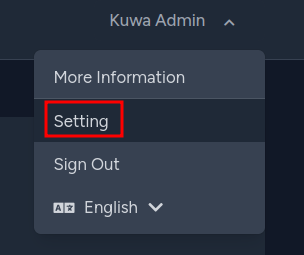
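Before entering the key in Kuwa, you may want to confirm that it works by calling Groq directly. The following is a minimal, stdlib-only sketch that assumes Groq's OpenAI-compatible chat-completions endpoint at `https://api.groq.com/openai/v1/chat/completions`. The helper names and the `GROQ_API_KEY`/`GROQ_MODEL` environment variables are hypothetical, and the model ID is left to you because Groq's model names change over time.

```python
import json
import os
import urllib.request

# Assumption: Groq's OpenAI-compatible chat-completions endpoint.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_groq_request(api_key: str, prompt: str, model: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completion request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            # Explicit User-Agent: some API gateways reject urllib's default one.
            "User-Agent": "groq-key-check/0.1",
        },
        method="POST",
    )


def check_key(api_key: str, model: str) -> str:
    """Send one short prompt; an HTTPError 401 typically means the key is invalid."""
    request = build_groq_request(api_key, "Reply with one word: hello", model)
    with urllib.request.urlopen(request, timeout=30) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical environment variables; use a model ID listed in your Groq console.
    key, model = os.environ.get("GROQ_API_KEY"), os.environ.get("GROQ_MODEL")
    if key and model:
        print(check_key(key, model))
```

Separating request construction from sending keeps the sketch easy to inspect without network access; only `check_key` actually contacts Groq.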

Configuring Kuwa

- Enter the API Key

Enter the API key obtained in the previous step under "User Settings > API Management > Custom Third-Party API Key".

- Use the Model

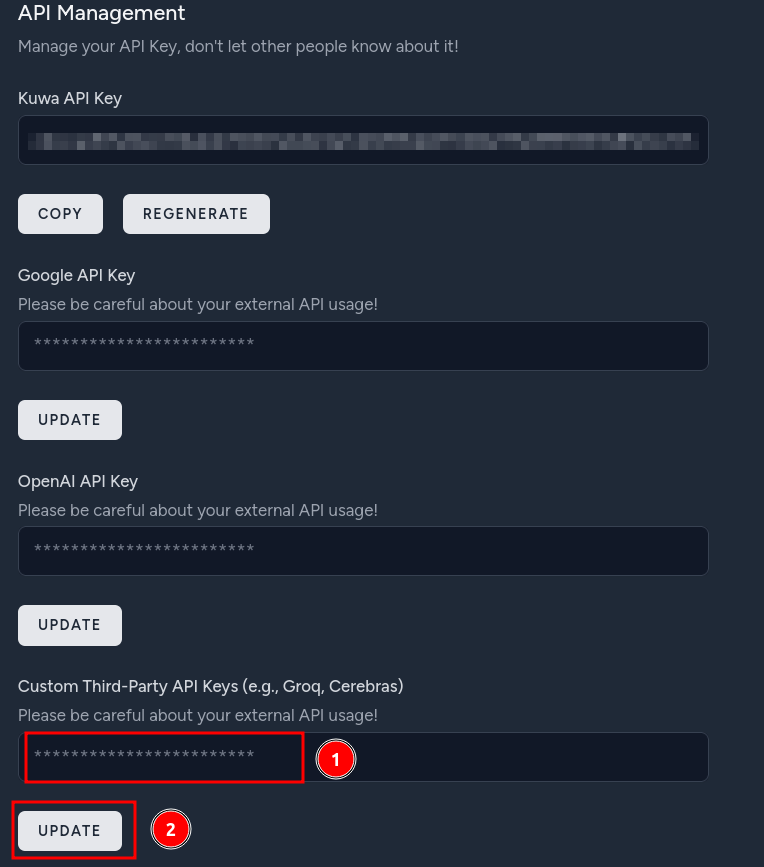

v0.3.4 comes with a pre-built model called "Llama3.1 70B (Groq API)".

After entering the Groq API key, you can use this model.

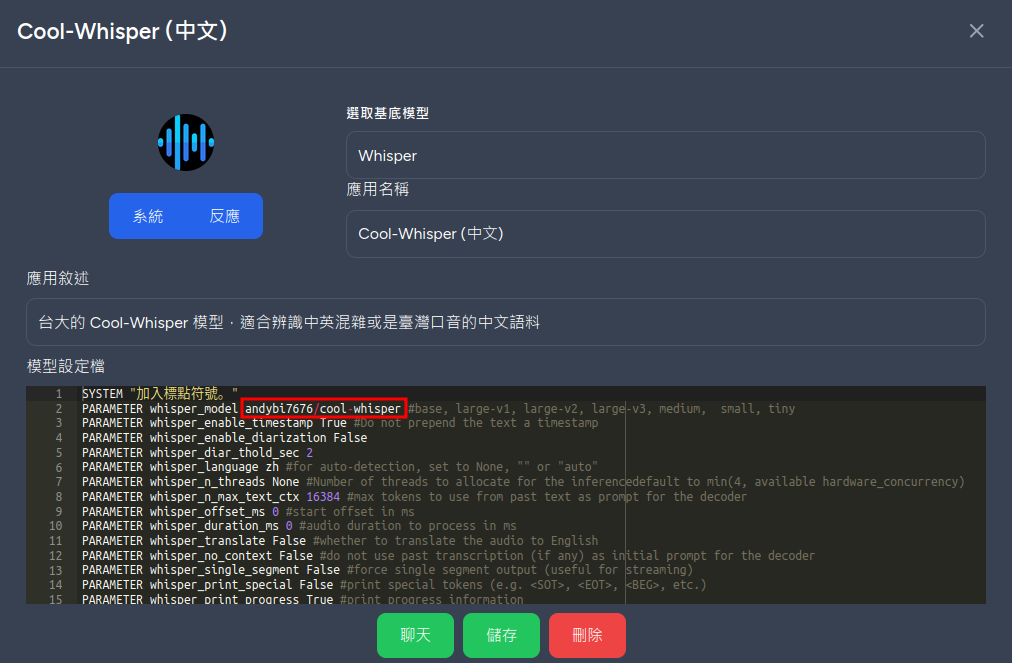

Cool-Whisper Tutorial

Liang-Hsuan Tseng of National Taiwan University and the NTU COOL team released the Cool-Whisper model last night (7/17). The model is suited to recognizing Taiwanese-accented Mandarin and mixed Chinese-English audio files.

Kuwa can directly apply it by simply modifying the Modelfile.

The model was temporarily taken offline around 12:00 on 7/18 due to privacy concerns.

If you want to use this model, keep following its Hugging Face Hub page and use it once it is re-released.

Setup Steps

- Refer to the Whisper setup tutorial to start the Whisper executor.
- The Cool-Whisper model is approximately 1.5 GB in size and will occupy up to 10 GB of VRAM during execution.
- Create a new bot named Cool-Whisper in the store, select Whisper as the base model, and fill in the following Modelfile, paying particular attention to the `PARAMETER whisper_model andybi7676/cool-whisper` parameter. The system prompt "加入標點符號。" means "Add punctuation."

```
SYSTEM "加入標點符號。"
PARAMETER whisper_model andybi7676/cool-whisper #base, large-v1, large-v2, large-v3, medium, small, tiny
PARAMETER whisper_enable_timestamp True #Prepend a timestamp to each text segment
PARAMETER whisper_enable_diarization False
PARAMETER whisper_diar_thold_sec 2
PARAMETER whisper_language zh #for auto-detection, set to None, "" or "auto"
PARAMETER whisper_n_threads None #Number of threads to allocate for inference; defaults to min(4, available hardware_concurrency)
PARAMETER whisper_n_max_text_ctx 16384 #max tokens to use from past text as prompt for the decoder
PARAMETER whisper_offset_ms 0 #start offset in ms
PARAMETER whisper_duration_ms 0 #audio duration to process in ms
PARAMETER whisper_translate False #whether to translate the audio to English
PARAMETER whisper_no_context False #do not use past transcription (if any) as initial prompt for the decoder
PARAMETER whisper_single_segment False #force single segment output (useful for streaming)
PARAMETER whisper_print_special False #print special tokens (e.g. <SOT>, <EOT>, <BEG>, etc.)
PARAMETER whisper_print_progress True #print progress information
PARAMETER whisper_print_realtime False #print results from within whisper.cpp (avoid it, use callback instead)
PARAMETER whisper_print_timestamps True #print timestamps for each text segment when printing realtime
PARAMETER whisper_token_timestamps False #enable token-level timestamps
PARAMETER whisper_thold_pt 0.01 #timestamp token probability threshold (~0.01)
PARAMETER whisper_thold_ptsum 0.01 #timestamp token sum probability threshold (~0.01)
PARAMETER whisper_max_len 0 #max segment length in characters
PARAMETER whisper_split_on_word False #split on word rather than on token (when used with max_len)
PARAMETER whisper_max_tokens 0 #max tokens per segment (0 = no limit)
PARAMETER whisper_speed_up False #speed-up the audio by 2x using Phase Vocoder
PARAMETER whisper_audio_ctx 0 #overwrite the audio context size (0 = use default)
PARAMETER whisper_initial_prompt None #Initial prompt, these are prepended to any existing text context from a previous call
PARAMETER whisper_prompt_tokens None #tokens to provide to the whisper decoder as initial prompt
PARAMETER whisper_prompt_n_tokens 0 #number of tokens in whisper_prompt_tokens
PARAMETER whisper_suppress_blank True #common decoding parameters
PARAMETER whisper_suppress_non_speech_tokens False #common decoding parameters
PARAMETER whisper_temperature 0.0 #initial decoding temperature
PARAMETER whisper_max_initial_ts 1.0 #max_initial_ts
PARAMETER whisper_length_penalty -1.0 #length_penalty
PARAMETER whisper_temperature_inc 0.2 #temperature_inc
PARAMETER whisper_entropy_thold 2.4 #similar to OpenAI's "compression_ratio_threshold"
PARAMETER whisper_logprob_thold -1.0 #logprob_thold
PARAMETER whisper_no_speech_thold 0.6 #no_speech_thold
```


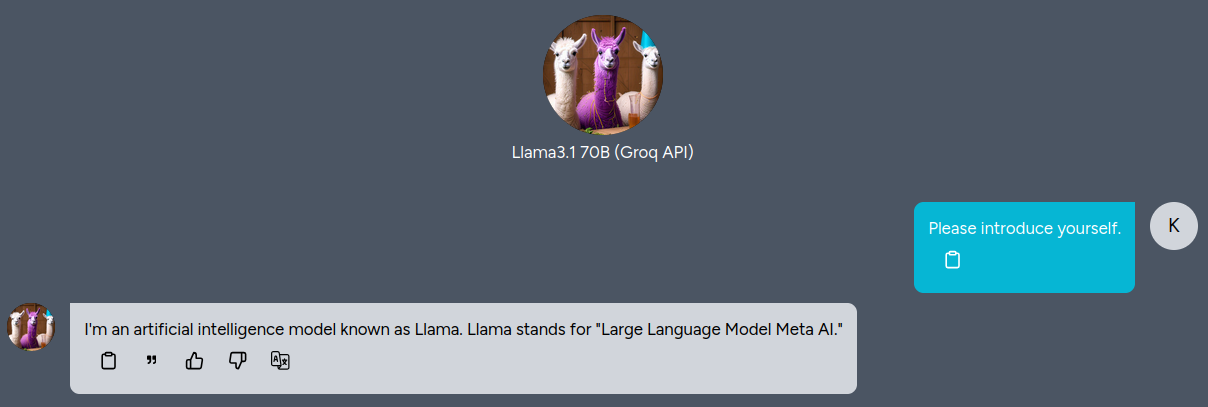

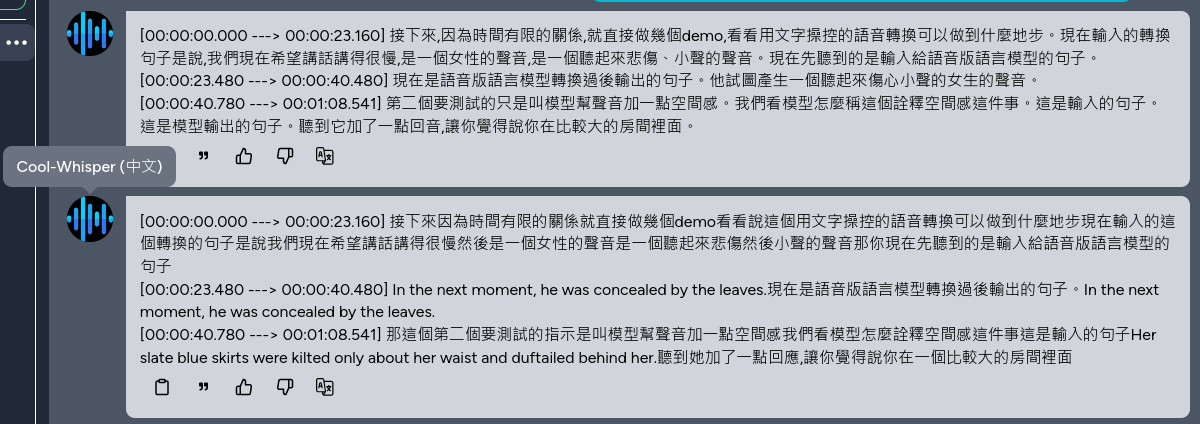

You can now use the Cool-Whisper model for speech recognition. The figure compares Whisper and Cool-Whisper on mixed Chinese-English audio files; Cool-Whisper accurately recognizes the mixed Chinese-English speech.
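The Modelfile above consists of a `SYSTEM` line and `PARAMETER key value` lines with optional trailing `#` comments. To illustrate how such a file can map to settings, here is a minimal parser sketch. It is not Kuwa's parser (that lives in `genai-os/src/executor/src/kuwa/executor/modelfile.py`); `parse_modelfile`, its use of `shlex`, and the conversion of `True`/`False`/`None`/numbers are assumptions for illustration.

```python
import shlex
from typing import Any, Dict, Optional, Tuple


def _convert(raw: str) -> Any:
    """Convert a raw value to bool, None, int, or float when it looks like one."""
    if raw in ("True", "False"):
        return raw == "True"
    if raw == "None":
        return None
    for cast in (int, float):
        try:
            return cast(raw)
        except ValueError:
            pass
    return raw


def parse_modelfile(text: str) -> Tuple[Optional[str], Dict[str, Any]]:
    """Return (system_prompt, parameters) from SYSTEM and PARAMETER lines.

    shlex drops trailing '#' comments and keeps quoted values as one token.
    """
    system: Optional[str] = None
    params: Dict[str, Any] = {}
    for line in text.splitlines():
        tokens = shlex.split(line, comments=True)
        if not tokens:
            continue  # blank line or comment-only line
        directive = tokens[0].upper()
        if directive == "SYSTEM":
            system = " ".join(tokens[1:])
        elif directive == "PARAMETER" and len(tokens) >= 3:
            params[tokens[1]] = _convert(" ".join(tokens[2:]))
    return system, params
```

Quoted values such as the crawler user-agent string in the RAG section stay intact because `shlex` treats quoted text as a single token; in this sketch, a `#` inside an unquoted value would be read as the start of a comment.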

References

RAG Custom Parameters Tutorial

Starting with v0.3.1, Kuwa's RAG applications (DocQA/WebQA/DatabaseQA/SearchQA) support customizing advanced parameters through the Bot's Modelfile, so a single Executor can be virtualized into multiple RAG applications. Parameter descriptions and an example follow.

Parameter Description

The values below are the defaults for the v0.3.1 RAG applications.

Shared Parameters for All RAGs

```
PARAMETER retriever_embedding_model "thenlper/gte-base-zh" # Embedding model name
PARAMETER retriever_mmr_fetch_k 12 # Number of candidate chunks fetched before MMR re-ranking
PARAMETER retriever_mmr_k 6 # Number of chunks kept after MMR re-ranking
PARAMETER retriever_chunk_size 512 # Length of each chunk in characters (not restricted for DatabaseQA)
PARAMETER retriever_chunk_overlap 128 # Overlap length between chunks in characters (not restricted for DatabaseQA)
PARAMETER generator_model None # Model that generates the answer; None means auto-selection
PARAMETER generator_limit 3072 # Length limit of the entire prompt in characters
PARAMETER display_hide_ref False # If True, do not show references
```
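The `retriever_chunk_*` and `retriever_mmr_*` parameters control how documents are split and which chunks reach the model. The sketch below is a generic, stdlib-only illustration and not Kuwa's implementation: a sliding-window character chunker, plus maximal marginal relevance (MMR) re-ranking applied to the `fetch_k` most similar chunks. The trade-off weight `lambda_mult=0.5` is an assumption (it is LangChain's default) and is not one of the parameters listed above; the toy vectors stand in for real embeddings.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def split_into_chunks(text: str, chunk_size: int = 512, chunk_overlap: int = 128) -> List[str]:
    """Sliding-window character chunker: consecutive chunks share chunk_overlap characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("require 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr_select(query: Vector, candidates: Sequence[Vector], k: int,
               lambda_mult: float = 0.5) -> List[int]:
    """Greedy MMR: trade similarity to the query against similarity to chunks already picked."""
    remaining = list(range(len(candidates)))
    selected: List[int] = []
    while remaining and len(selected) < k:
        def score(i: int) -> float:
            relevance = cosine(query, candidates[i])
            redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


def retrieve(query: Vector, chunk_vectors: Sequence[Vector], fetch_k: int = 12, k: int = 6,
             lambda_mult: float = 0.5) -> List[int]:
    """Fetch the fetch_k most similar chunks, then keep k of them via MMR."""
    ranked = sorted(range(len(chunk_vectors)),
                    key=lambda i: cosine(query, chunk_vectors[i]), reverse=True)
    pool = ranked[:fetch_k]
    picked = mmr_select(query, [chunk_vectors[i] for i in pool], k, lambda_mult)
    return [pool[i] for i in picked]
```

With a duplicated chunk in the pool, plain top-k similarity would return both copies, while MMR skips the duplicate in favor of a less redundant chunk. This is why `retriever_mmr_fetch_k` is larger than `retriever_mmr_k`.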

DocQA, WebQA, SearchQA Specific Parameters

```
PARAMETER crawler_user_agent "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36" # Crawler UA string
```

SearchQA Specific Parameters

```
PARAMETER search_advanced_params "" # Advanced search parameters (SearchQA only)
PARAMETER search_num_url 3 # Number of search results to retrieve [1~10] (SearchQA only)
```

DatabaseQA Specific Parameters

```
PARAMETER retriever_database None # Path to vector database on local Executor
```

Usage Example

Suppose you want to create a DatabaseQA knowledge base and specify which model answers. Create a Bot, select DocQA as the base model, and fill in the following Modelfile:

```
PARAMETER generator_model "model_access_code" # Model that generates the answer (by access code); None means auto-selection
PARAMETER generator_limit 3072 # Length limit of the entire prompt in characters
PARAMETER retriever_database "/path/to/local/database/on/executor" # Path to vector database on local Executor
```
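`generator_limit` caps the length of the whole prompt in characters. One way to respect such a cap is to pack retrieved chunks greedily in ranked order until the budget runs out. This is a hypothetical sketch: `build_prompt` and its prompt template are illustrative assumptions, since the document does not show Kuwa's actual template.

```python
from typing import List


def build_prompt(question: str, chunks: List[str], generator_limit: int = 3072) -> str:
    """Greedily pack ranked chunks so the prompt stays within generator_limit characters.

    The header/footer template is hypothetical, not Kuwa's real prompt.
    """
    header = "Answer the question using the references below.\n\n"
    footer = f"\nQuestion: {question}"
    budget = generator_limit - len(header) - len(footer)
    if budget < 0:
        raise ValueError("generator_limit is too small for the question")
    parts: List[str] = []
    used = 0
    for i, chunk in enumerate(chunks, start=1):
        piece = f"[{i}] {chunk}\n"
        if used + len(piece) > budget:
            break  # stop at the first chunk that no longer fits, preserving rank order
        parts.append(piece)
        used += len(piece)
    return header + "".join(parts) + footer
```

Stopping at the first chunk that does not fit keeps the highest-ranked references; a variant could instead skip it and try shorter chunks further down the list.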

Tool Development Tutorial

Kuwa is designed to connect to a variety of non-LLM tools. The simplest example tool is `src/executor/debug.py`, whose contents are as follows.

```python
import os
import sys
import asyncio
import logging
import json

sys.path.append(os.path.dirname(os.path.abspath(__file__)))

from kuwa.executor import LLMExecutor, Modelfile

logger = logging.getLogger(__name__)


class DebugExecutor(LLMExecutor):
    def __init__(self):
        super().__init__()

    def extend_arguments(self, parser):
        """
        Override this method to add custom command-line arguments.
        """
        parser.add_argument('--delay', type=float, default=0.02, help='Inter-token delay')

    def setup(self):
        self.stop = False

    async def llm_compute(self, history: list[dict], modelfile: Modelfile):
        """
        Handles a request. The input is the chat history (in OpenAI format)
        and the parsed Modelfile (see
        `genai-os/src/executor/src/kuwa/executor/modelfile.py`). It returns an
        asynchronous generator that represents the output stream.
        """
        try:
            self.stop = False
            # Echo the concatenated history back one character at a time.
            for char in "".join([msg['content'] for msg in history]).strip():
                yield char
                if self.stop:
                    self.stop = False
                    break
                # A per-bot Modelfile parameter "llm_delay" takes precedence over --delay.
                await asyncio.sleep(modelfile.parameters.get("llm_delay", self.args.delay))
        except Exception as e:
            logger.exception("Error occurs during generation.")
            yield str(e)
        finally:
            logger.debug("finished")

    async def abort(self):
        """
        This method is invoked when the user presses the interrupt generation button.
        """
        self.stop = True
        logger.debug("aborted")
        return "Aborted"


if __name__ == "__main__":
    executor = DebugExecutor()
    executor.run()
```
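To see the streaming and abort contract without installing Kuwa, the stand-alone sketch below mirrors the `llm_compute`/`abort` pattern of `DebugExecutor`. `EchoTool` and `collect` are hypothetical names; `collect` only approximates how the Kuwa runtime consumes the output stream and calls `abort()` when the user interrupts generation, which is an assumption.

```python
import asyncio
from typing import AsyncIterator, Dict, List, Optional


class EchoTool:
    """Hypothetical stand-in following DebugExecutor's llm_compute/abort pattern,
    without subclassing kuwa's LLMExecutor."""

    def __init__(self, delay: float = 0.0) -> None:
        self.delay = delay  # inter-token delay in seconds
        self.stop = False

    async def llm_compute(self, history: List[Dict[str, str]]) -> AsyncIterator[str]:
        self.stop = False
        text = "".join(msg["content"] for msg in history).strip()
        for char in text:
            yield char
            # abort() may have run while this generator was suspended at the yield.
            if self.stop:
                self.stop = False
                break
            await asyncio.sleep(self.delay)

    async def abort(self) -> str:
        self.stop = True
        return "Aborted"


async def collect(tool: EchoTool, history: List[Dict[str, str]],
                  abort_after: Optional[int] = None) -> str:
    """Consume the stream; optionally simulate the interrupt button after abort_after tokens."""
    tokens: List[str] = []
    async for token in tool.llm_compute(history):
        tokens.append(token)
        if abort_after is not None and len(tokens) == abort_after:
            await tool.abort()
    return "".join(tokens)


if __name__ == "__main__":
    history = [{"role": "user", "content": "hello world"}]
    print(asyncio.run(collect(EchoTool(), history, abort_after=3)))  # prints "hel"
```

Because the stop flag is checked right after each `yield`, an abort takes effect before the next token is produced, so no extra output leaks out after the interrupt.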

Introduction to Kuwa GenAI OS

Kuwa GenAI OS is a free, open, secure, and privacy-focused open-source system. It provides a user-friendly interface for generative AI and serves as a new-generation generative AI orchestration system that supports rapid development of LLM applications. Kuwa offers an end-to-end solution for multilingual, multi-model development and deployment, empowering individuals and industries to use generative AI on local laptops, servers, or the cloud, to develop applications, or to open stores and provide services externally. Here is a brief description of Kuwa GenAI OS:

Usage Environment

- Supports multiple operating systems, including Windows, Linux, and macOS, and provides easy installation and software update tools: a single installation executable for Windows, an automatic installation script for Linux, a Docker startup script, and a pre-installed virtual machine (VM) image.

- Supports a variety of hardware environments, from Raspberry Pi, laptops, personal computers, and on-premises servers to virtual hosts, public and private clouds, with or without GPU accelerators.

User Interface

- From the integrated interface, users can select any model, knowledge base, or GenAI application and combine them into single or group chat rooms.

- Within a chat room, users can steer the conversation, quote earlier messages, choose between group chat and direct private chat, and switch between continuous Q&A mode and single-question Q&A mode.

- Generation can be interrupted at any time. Users can import prompt scripts or upload files, export complete chat room transcripts, output files directly in formats such as PDF, Doc/ODT, or plain text, or share conversations as web pages.

- Supports multimodal language models for text, image generation, speech, and visual recognition; highlights the syntax of code and Markdown; and provides quick access to system gadgets.

Development Interface

- Users can skip coding by connecting existing models, knowledge bases, or Bot applications, adjusting system prompts and parameters, presetting scenarios, or creating prompt templates to create personalized or more powerful GenAI applications.

- Users can create their own knowledge base by simple drag and drop, or import existing vector databases, and can use multiple knowledge bases for GenAI applications at the same time.

- Users can create and maintain their own shared app store and share bot applications with others.

- Kuwa's extended model features and advanced RAG functions can be configured and enabled through Ollama-style Modelfiles.

Deployment Interface

- Supports multiple languages, allows the interface and messages to be customized, and can be deployed directly to provide services externally.

- Users can connect existing accounts or register with an invitation code, and forgotten passwords can be reset by email.

- System settings can modify system announcements, terms of service, warnings, etc., or perform group permission management, user management, model management, etc.

- The dashboard supports feedback management, system log management, security and privacy management, message query, etc.

Development Environment

- Integrates a variety of open-source generative AI tools, including Faiss, Hugging Face, LangChain, llama.cpp, Ollama, vLLM, and various embedding- and Transformer-related packages. Developers can download, connect, and develop various multimodal LLMs and applications.

- The RAG toolchain includes multiple retrieval-augmented generation tools, such as DBQA, DocumentQA, WebQA, and SearchQA, which can be connected to search engines and automatic crawlers or integrated with existing corporate databases and systems, making it easier to develop advanced customized applications.

- Because Kuwa is open source, developers can build custom systems tailored to their own needs.

Stable Diffusion Image Generation Model Building Tutorial

Kuwa v0.3.1 adds Kuwa Painter, based on the Stable Diffusion image generation model. You can generate an image from a text prompt, or upload an image and generate a new image from it guided by a text prompt.

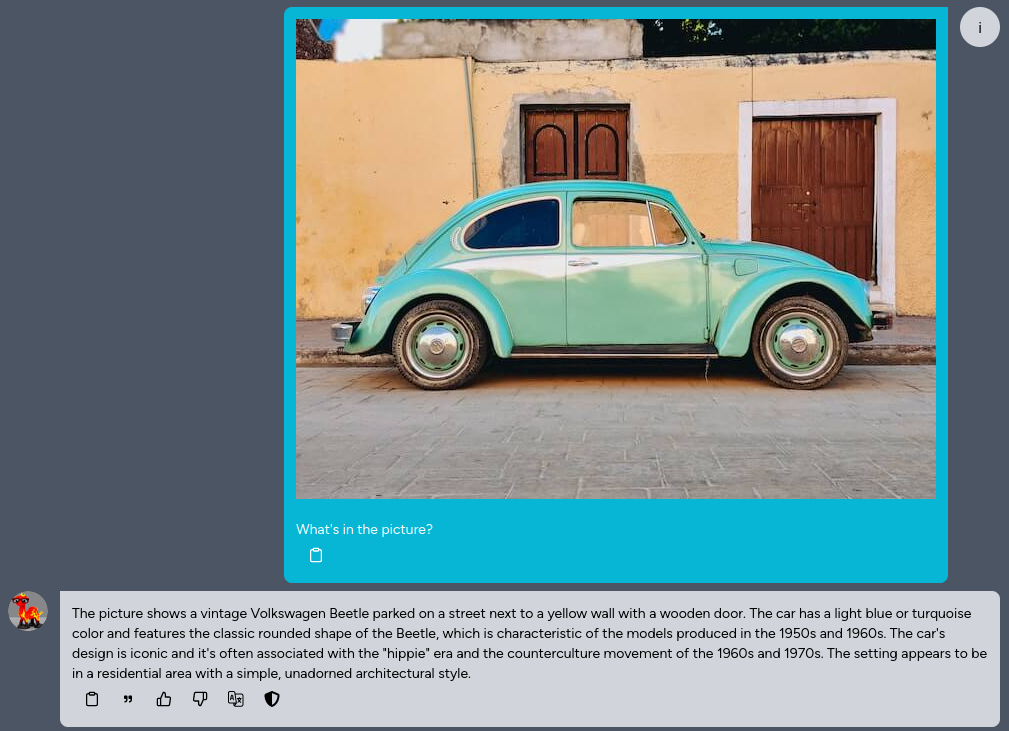

Visual-Language Model Setup Tutorial

Kuwa v0.3.1 has preliminary support for commonly used visual language models (VLMs). In addition to text inputs, such models can also take images as input and respond to user instructions based on the content of the images. This tutorial will guide you through the initial setup and usage of VLMs.

Whisper Setup Tutorial

Kuwa v0.3.1 adds Kuwa Speech Recognizer, based on the Whisper speech recognition model. It generates transcripts from uploaded audio files and supports timestamps and speaker labels.

Known Issues and Limitations

Hardware requirements

By default, the Whisper medium model is used and speaker diarization is disabled. The following table shows the approximate GPU VRAM consumption of each model.

| Model name | Number of parameters | VRAM requirement | Relative recognition speed (large = 1x) |
|---|---|---|---|
| tiny | 39 M | ~1 GB | ~32x |
| base | 74 M | ~1 GB | ~16x |
| small | 244 M | ~2 GB | ~6x |
| medium | 769 M | ~5 GB | ~2x |
| large | 1550 M | ~10 GB | 1x |
| pyannote/speaker-diarization-3.1 (Speaker Diarization) | - | ~3 GB | - |
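The table can be turned into a quick model choice for a given GPU. The following is a sketch under two assumptions: the approximate figures above hold, and diarization VRAM simply adds to the Whisper model's VRAM. `largest_whisper_model` is a hypothetical helper, not part of Kuwa.

```python
from typing import List, Optional, Tuple

# Approximate VRAM per Whisper model in GB, copied from the table above (smallest first).
WHISPER_VRAM_GB: List[Tuple[str, float]] = [
    ("tiny", 1), ("base", 1), ("small", 2), ("medium", 5), ("large", 10),
]
DIARIZATION_VRAM_GB = 3  # pyannote/speaker-diarization-3.1


def largest_whisper_model(vram_gb: float, diarization: bool = False) -> Optional[str]:
    """Return the largest model whose estimated VRAM fits, or None if none fits.

    Assumes diarization VRAM adds to the Whisper model's VRAM.
    """
    budget = vram_gb - (DIARIZATION_VRAM_GB if diarization else 0)
    best = None
    for name, need in WHISPER_VRAM_GB:
        if need <= budget:
            best = name
    return best
```

For example, an 8 GB GPU fits `medium` with or without diarization under these estimates, while a 6 GB GPU with diarization enabled drops to `small`.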

Known limitations

- Currently, the input language cannot be detected automatically and must be specified manually.

- Currently, the speaker identification module is multi-threaded, causing the model to be reloaded each time, resulting in a longer response time.

- Speech is easily misrecognized when multiple speakers talk at the same time.